I spent 7 hours reading another paper to understand more about Snowflake's internals. Here's what I found.

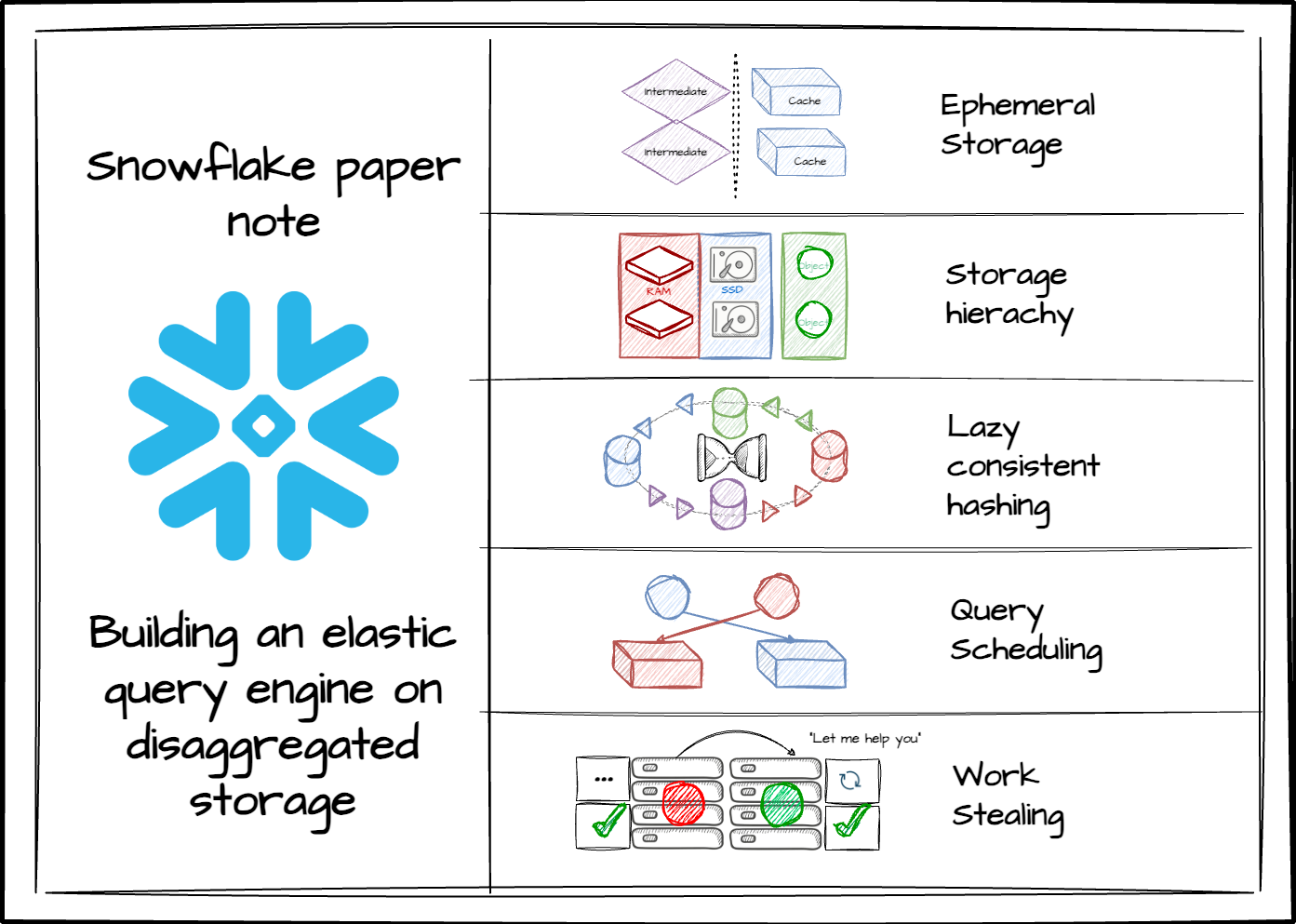

Notes on Snowflake's paper, "Building An Elastic Query Engine on Disaggregated Storage."
