Why is Text-to-SQL so hard?

Why is there a need for it? What are its challenges? Is there a way to make it easier?

I will publish a paid article every Tuesday. I wrote these with one goal in mind: to offer my readers, whether they are feeling overwhelmed when beginning the journey or seeking a deeper understanding of the field, 15 minutes of practical lessons and insights on nearly everything related to data engineering.

Intro

As Joe Reis and Matt Housley once said in the infamous book, Fundamentals of Data Engineering:

A data engineer manages the data engineering lifecycle, starting with extracting data from source systems and concluding with serving data for specific use cases.

The data serving is the primary interface through which we provide our service to end users (e.g., data analysts, data scientists, business stakeholders). No matter how well we store, process, and manage the data, if users cannot access or use it reliably, we have failed.

Today, I want to discuss one of the hottest methods for serving data in the era of AI: natural language to SQL. We will first understand why text-to-SQL is receiving a lot of attention recently, what its challenges are, and then attempt to find a solution that addresses them.

Why Text-to-SQL

In the past, if business users wanted to gain insight from the data, they had to communicate with the IT department so that these technical experts could assist them.

The business intelligence tools have evolved since then. More functionalities, a shinier UI, the ability to connect to more systems, and most importantly, more friendly to non-technical users.

From asking the technical team for help, business users can now build their own charts or create reports with the assistance of modern business intelligence tools, which allow them to drag-and-drop the data fields they want.

But it seems like that’s not enough. The rise of AI chat interfaces like ChatGPT or Gemini makes people realize that “oh, using natural language is even more productive compared to the visual drag-and-drop.“ BI tools on the market are starting to integrate the ability to answer human questions with the help of AI models.

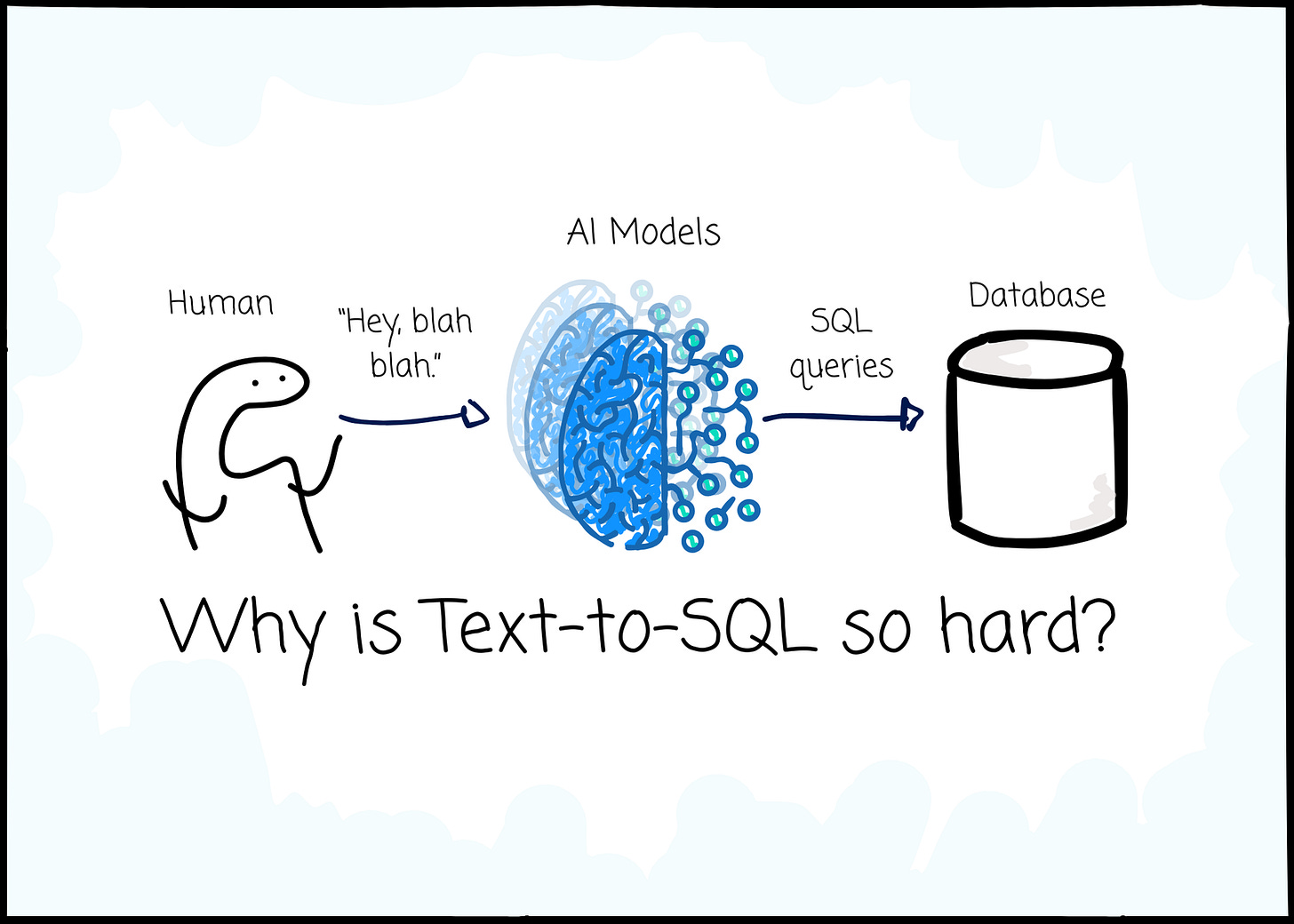

The key is to enable the AI models to translate user input into SQL queries. Then, the tool will send the SQL to the database and create a chart/report based on the results.

Instead of choosing the `total_sales` and `country` fields, a simple text, “Show me the total sales breakdown by country in the last month,” is more intuitive for the users. Integrating with AI makes a solution more compelling.

Challenges of Text-to-SQL

I refer to the paper “A Survey of Text-to-SQL in the Era of LLMs: Where are we, and where are we going?” for this section.

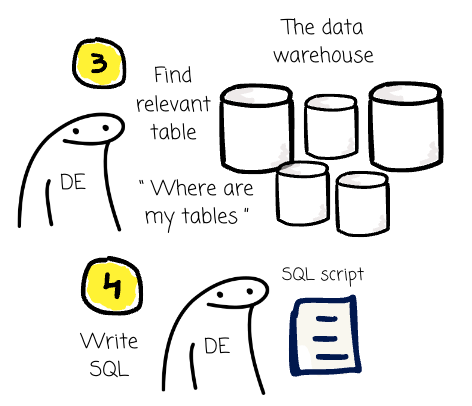

Instructing AI models to accept natural language input and output a reliable SQL query is not easy to achieve. To better understand the challenges, let’s first revisit some steps that humans take to write SQL:

We begin with the business question, the natural language query: for example, all countries with sales greater than 2,000 on Independence Day.

In our brain, we identify the entities: the countries, the sales, the context: June, and the condition: sales greater than 2,000.

We find the relevant tables, columns, and records by examining the database schema. The human interpretation is essential here, which kind of sales (assume the company has more than one product), and what date is Independence Day? (This varies in countries.) This step may require us to revisit the business users to request additional information.

Then, we write SQL based on our understanding. We Select, Join, Group By, Where…

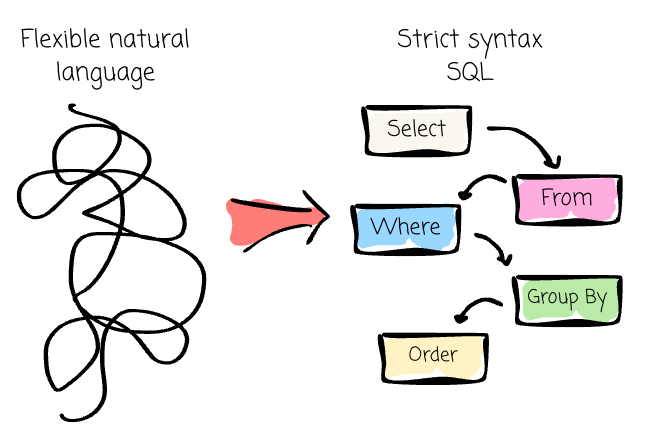

We, humans, despite knowing what we are trying to do, still have some challenging problems while handling the “text-to-SQL “ process: the uncertainty of the natural language, the database’s complexity, and the translation from the “flexible” natural language queries to the “strict” SQL queries.

Natural language uncertainty

We use natural language from the day we learn to say our first words, such as “mama” or “papa“. We practice it every day, and the way we communicate depends significantly on who we are, how we grew up, and how we perceive the world.

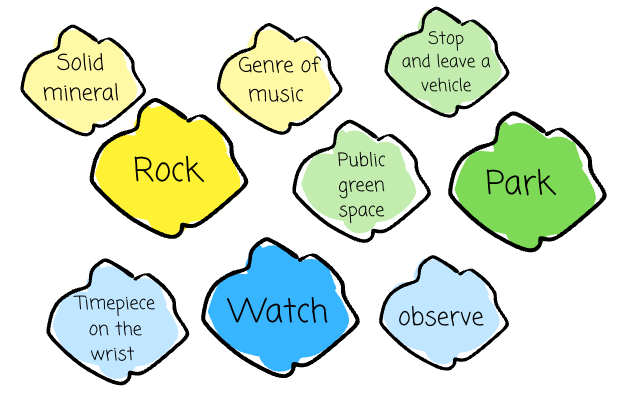

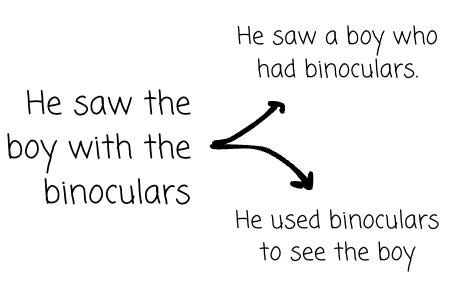

It’s normal for us to say a thing, and others understand it in different ways. This is called ambiguity. It could happen when a single word has multiple meanings, …

…or a sentence can be parsed in various ways.

The uncertainty also stemmed from under-specification, which occurs when expressions lack sufficient detail or context to convey their intended meanings. For example, Independence Day in Vietnam is different from Independence Day in the United States of America.

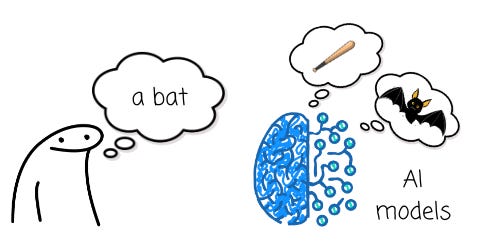

We can ask others, observe around, or leverage our experience and understanding to resolve the ambiguity. Meanwhile, the AI models might only have a natural language query.

The database’s complexity

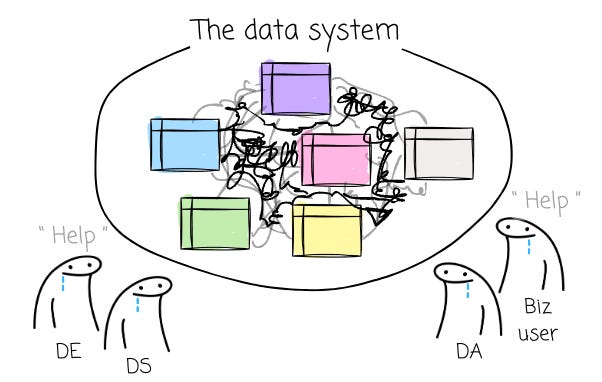

It’s common for us, data engineers, to handle messy data systems. Lack of robust data modeling, complex relationships between tables, ambiguous columns, or more than one way to calculate a metric.

Let’s confess here, it is tough for us to do the right thing the first time with this data system. We might run around the companies to ask for more clarification, cause some bugs, and create some weird reports before learning how to do it right. An AI model, somewhere on the internet, knows nothing about your company’s data system. How could we expect it to do better than us?

Text-to-SQL Translation

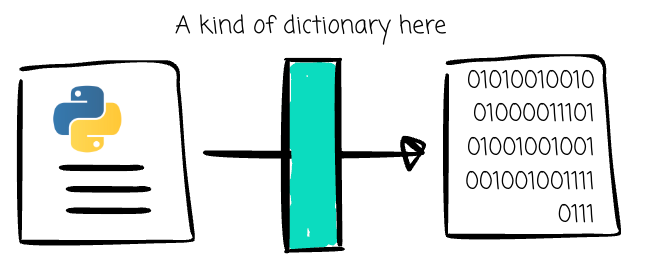

For the machine to understand, our Python or Java code must be translated into low-level machine language. This is a complex task, but at a high level, things are straightforward, as each language has a kind of dictionary to facilitate a one-to-one mapping between programming language code and machine code.

However, converting text to SQL is more challenging than that, as it typically involves a one-to-many mapping between the input natural language query ←→ database entities and natural language query ←→ SQL query.

Natural language is flexible, whereas SQL queries must adhere to a strict syntax. Even SQL queries could have different syntax depending on the standard and the database implementation.

We require not only that the queries be executable, but also that they be readable, optimized, and reliable. Placing this responsibility on the AI models seems to overwhelm them, given that they may return low-performance queries, hard-to-debug ones, inaccurate results, or multiple SQL queries for the same prompt.

This article is sponsored by Holistics, a self-service BI tool built for the AI era.

So, is there a way for us to deal with these problems?

It turns out that there is a promising approach.

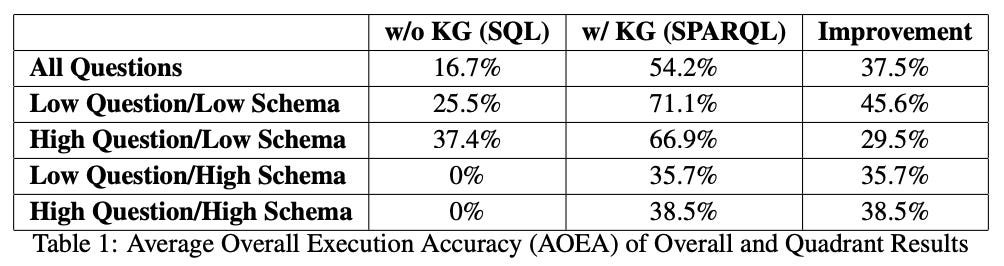

In the paper “A benchmark to understand the role of knowledge graphs on large language models’ accuracy for question answering on enterprise SQL databases”, the author created a robust benchmark series of questions with different levels of complexity using a standardized insurance dataset. They asked ChatGPT to answer the questions in two ways:

Generate the SQL directly

Generate the SQL with the help of a knowledge graph

They observed that leveraging the knowledge graph indeed helps improve the accuracy of results:

Essentially, a knowledge graph is a structured way to represent knowledge about entities and their relationships, utilizing a graph-based data model. There is a popular solution that offers the same benefit.

Yes, it is the semantic layer

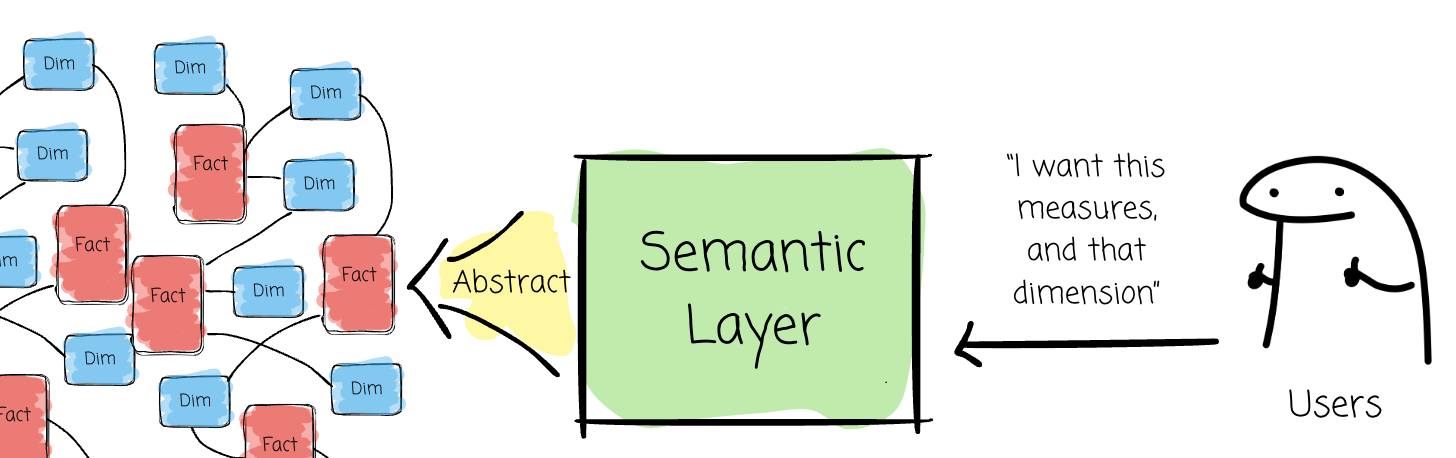

As a company’s business expands, the volume and variety of data increase; more decisions need to be made, more data must be stored, and more source data must be captured. Despite how well we prepare, data users might struggle to understand what they need to use the data effectively. We need a better abstraction layer that can lower the barrier for people.

The semantic layer is an abstraction layer that sits between the underlying data (e.g., data warehouses) and end-user applications (e.g., BI tools, data applications, or business users). From a high level, a semantic layer solution requires us to map business-friendly concepts to underlying data assets and specify the relationships between them.

Thanks to that, the layer acts as a translator between the data and its users. It abstracts all the complexity to ensure that only understandable and business-friendly concepts are presented to users.

Semantic layer’s role in Text-to-SQL tasks

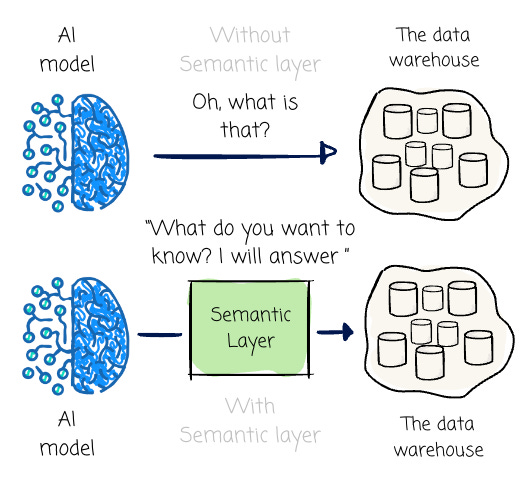

Recall that ambiguity and database complexity affect the accuracy of the text-to-SQL system. With the help of the semantic layer, the Text-to-SQL output could be more reliable:

AI models don’t need to understand the database complexity anymore, as all the information they require is baked into the semantic layer, from the tables needed to the right way to join them. In other words, an AI model is enriched with context through the semantic layer.

When a user requests “total sales,” the AI does not need to infer or guess the logic; it can simply reference the predefined “Total Sales” metric in the semantic layer, which already contains the calculation. This limits the ambiguity.

A real-world example

The semantic layer has emerged lately, given its ability to abstract the complexity of the underlying data systems. As discussed, this is not only a benefit to business users but also to the AI models. The layer is an indispensable part of modern BI tools, such as Tableau, Looker, and Power BI, as well as an interesting solution called Holistics.

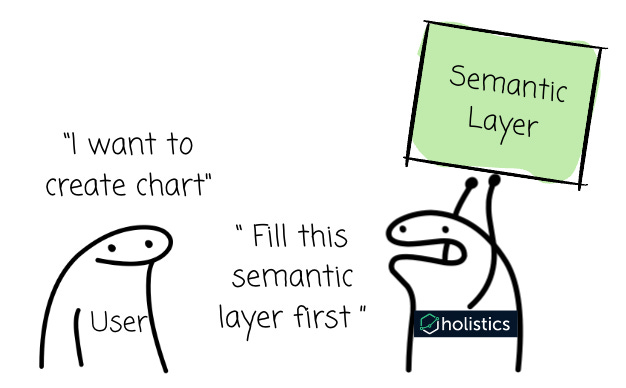

Established in 2015, the platform enables self-service data access for the entire organization. Compared to other BI tools, if users want to extract insight on Holistics, they must define their mapping between business concepts and the underlying tables via the semantic layer. Only after that, users can start presenting and organizing data using concepts exposed from the semantic layer.

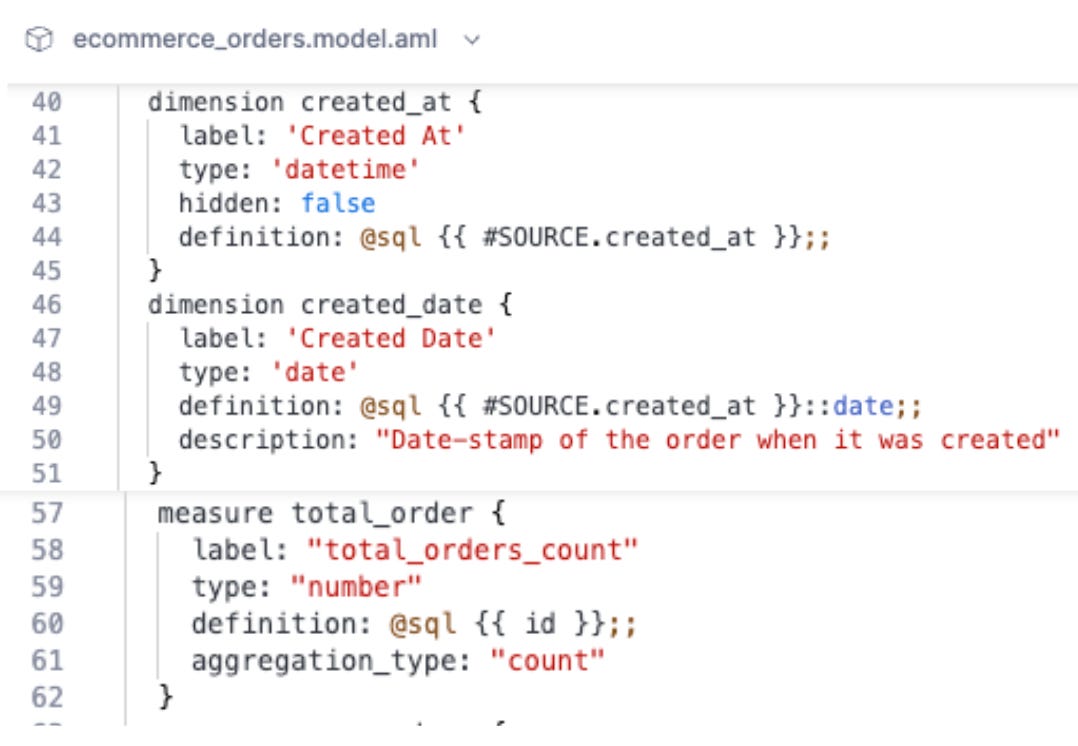

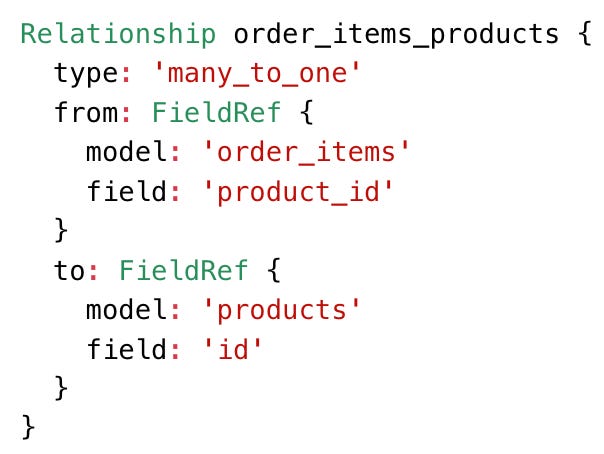

To work with the semantic layer, Holistics introduces the concept of “model“, which is an abstract representation on top of a table/query. A model should have the source (a physical table or a SQL query), the dimensions and measures, and the relationships to other models. Holistics uses relationships for constructing the join.

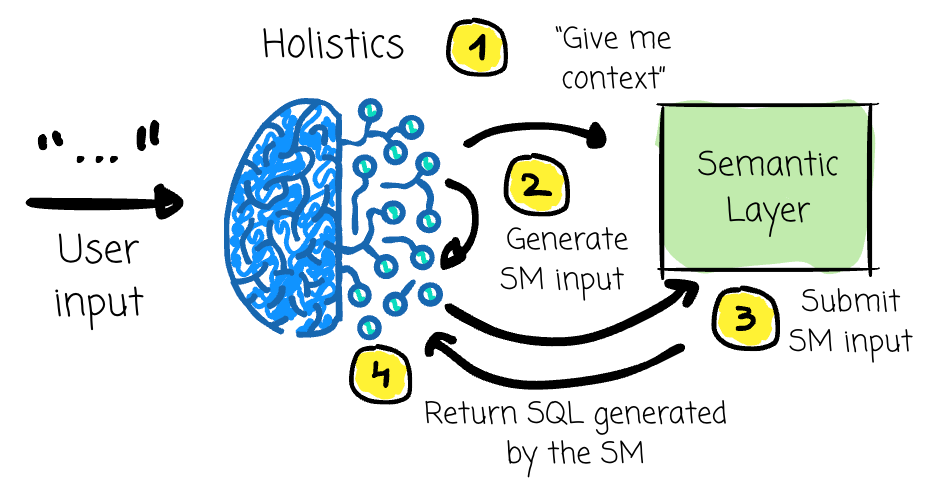

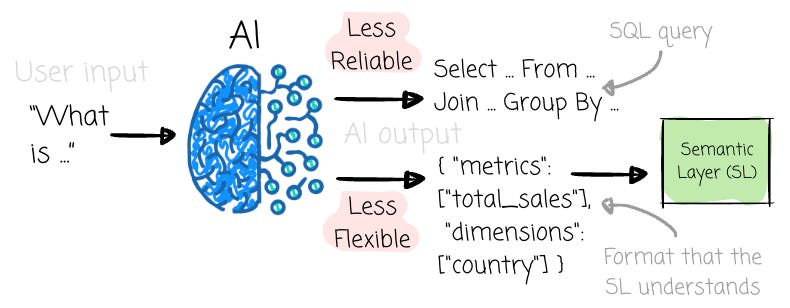

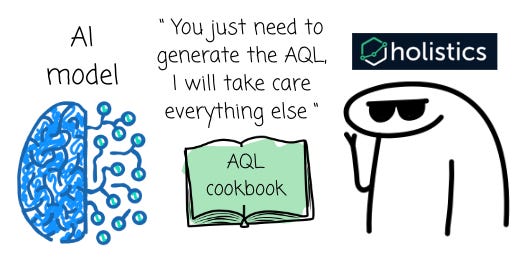

With Holistic’s vision of the semantic layer from the beginning, it would be easier for them to develop the text-to-SQL feature. They’ve tried several approaches, including letting the AI models offload the generation of SQL to the semantic layer by translating the user’s natural language input to a format that the semantic layer could understand, such as a JSON payload.

By doing it this way, the text-to-SQL process can become even more reliable, as the SQL queries are now controlled by the semantic layer, which is designed to generate output queries based on well-tested logic and predefined entities within the semantic layer. Compared to the fact that the AI model has to guess, this way is more reliable.

Even with the semantic layer, it might not be enough for text-to-SQL

Although relying entirely on the semantic layer could be beneficial, this approach may be limited by the fact that the input format, such as JSON, doesn’t provide users with the necessary flexibility in cases of complex analytics requirements.

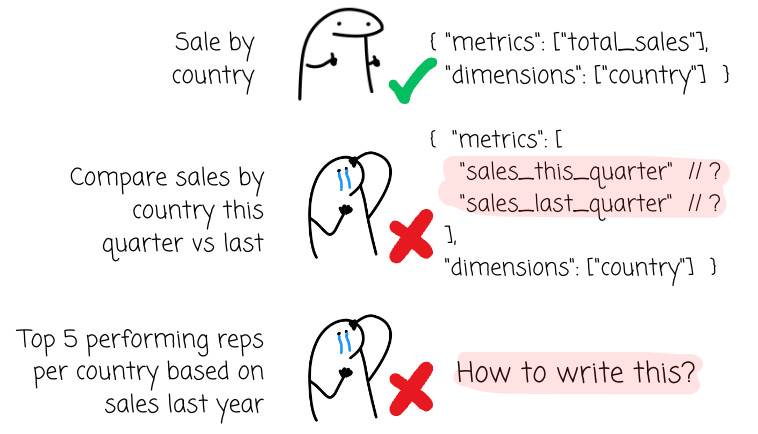

For example, with the pseudo-format like this:

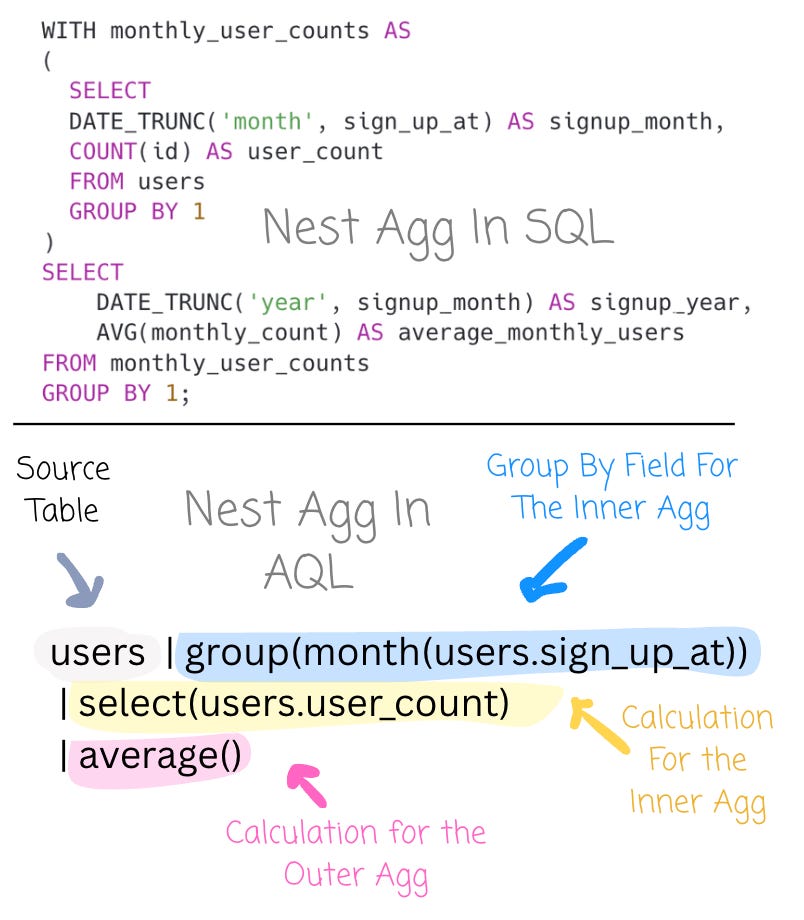

{ "metrics": ["total_sales"], "dimensions": ["country"]}It serves well for simple questions. However, the key-value formats could cause users trouble when expressing queries that require more advanced techniques, such as nested aggregation or period-over-period comparison.

So, letting the AI model generate the SQL directly is less reliable, but interacting via the semantic layer with the intermediate format is less flexible. What do we do?

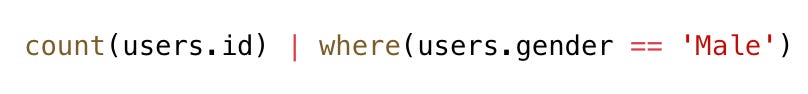

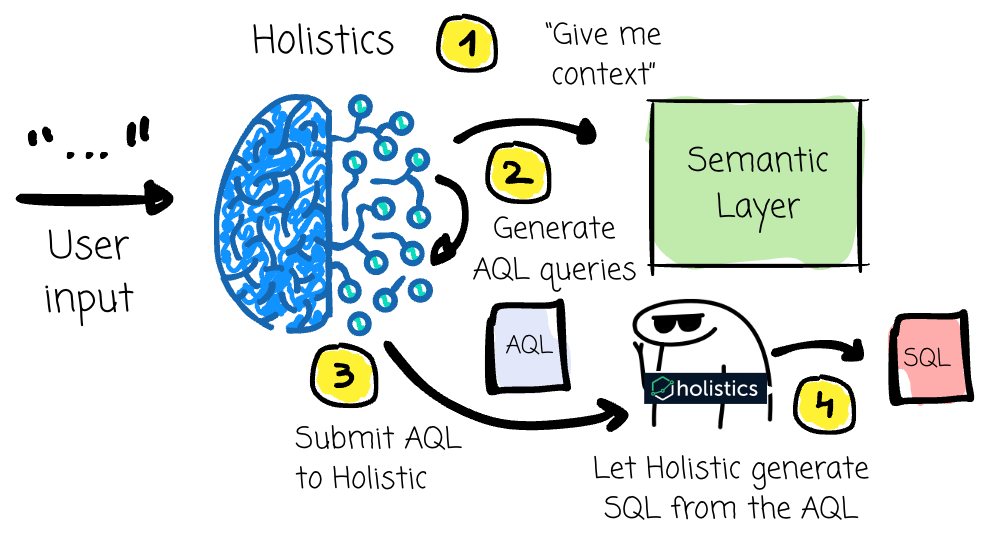

Holistics chooses to let the AI model generate the queries, but in a more reliable and controllable way. The model still leverages the help of the semantic layer for the business context and understanding; however, it has been trained to generate a new kind of query language instead of SQL. They call this AQL. s. Let’s delve into this language before moving on.

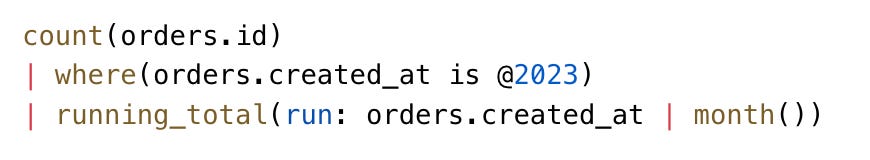

The AQL language

When the platform was first built, the creator behind Holistics had already developed a proprietary language for analytics, known as AQL. This language is designed to leverage the defined semantic layer, allowing us to query data at a higher level of abstraction.

AQL treats metrics as first-class citizens, making metric definition composable and reusable. This differs from SQL, where everything is a query. If you want to reuse a piece of metrics, you must save the query that calculates it somewhere, such as in a CTE, a view, or a table. When adjusting the metric logic, you must modify the query.

AQL queries are written using business concepts (dimensions and measures) defined in the semantic layer, not raw table and column names. A user can ask for `total_revenue` by `user_country` without having to write the complex JOIN statements. This abstraction simplifies query writing and drastically improves the readability and maintainability of analytics code.

Additionally, AQL introduces the pipe operator |, which takes the result of the expression on its left and uses it as the input for the function on its right. This creates a clear, sequential, top-to-bottom flow of logic.

Users express their metrics using AQL; then, Holistics converts them to SQL queries and executes them on the defined database.

The solution

Back to Holistics, the way they build the text-to-SQL will look like this: they trained their AI models to accept natural language input and output the AQL queries with the help of the semantic layer. The AQL query is then converted to a SQL query.

The outcomes are AI-generated queries that are fundamentally more verifiable, reliable, and governed than those produced by systems that attempt direct text-to-SQL translation:

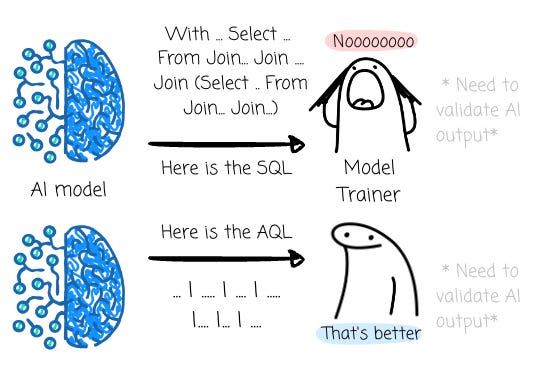

Verifiable & Readable: Because AQL is a high-level language that operates on business logic, the queries it generates are far more compact and intuitive than raw SQL. A user can look at a piped AQL query and immediately understand the logical steps the AI is taking and ensure that AI really gets what the intent of the question is about

This human-readability is critical for verification; it allows the model trainer or the end users to understand what the AI is doing. This is an improvement compared to spending time reading messy SQL queries.

The high level abstraction AQL provides reduces risks of errors and hallucination as compared to the risk of AI errors from interpreting and using low level SQL queries from scratch.

Because the AQL-to-SQL conversion is managed by Holistics’ well-tested system, the generated SQL query is guaranteed once the AQL is correct.

Reliable: By abstracting away the most error-prone aspects of query generation—such as dialect-specific syntax, complex join logic, and the formulas for advanced analytics—the system significantly increases its reliability.

The AI’s task is simplified to mapping intent to predefined metrics and dimensions in AQL. This leads to more accurate and dependable results.

Governed: Because every AQL query must operate through the semantic layer, it automatically inherits the organization’s single source of truth for business definitions.

The AI won’t invent its metric calculations. Furthermore, access controls defined in the semantic layer are automatically enforced, ensuring that users can only query data to which they are authorized.

Flexibility: AQL is designed to express complex metrics seamlessly, including AI; the capability of a text-to-SQL system will not be limited to simple queries only due to the limitation of the intermediate format, such as JSON.

Outro

In this article, we first explore why extracting data insights using natural language is gaining increasing attention. Next, we examine the challenges of Text-to-SQL and find out that there is a promising solution to improve the accuracy with the help of the semantic layer.

Finally, we examine a real-life example: Holistics, which understands its solution to Text-to-SQL by leveraging semantic layers and its self-developed analytics language, AQL.

Thank you for reading this far. See you next time.

Reference

[1] Phuc Nguyen, The Ideal Semantic Layer and Metric-Centric Paradigm, 2023

[2] Tan Huynh, Metrics Deserve Better Composition Than What SQL Allows, 2024

[4] Holistics Official Documentation

[5] Justin Heinze, History of Business Intelligence, 2020

Regarding the topic, what ethcal challenges arise with AI Text-to-SQL? Very insightful.