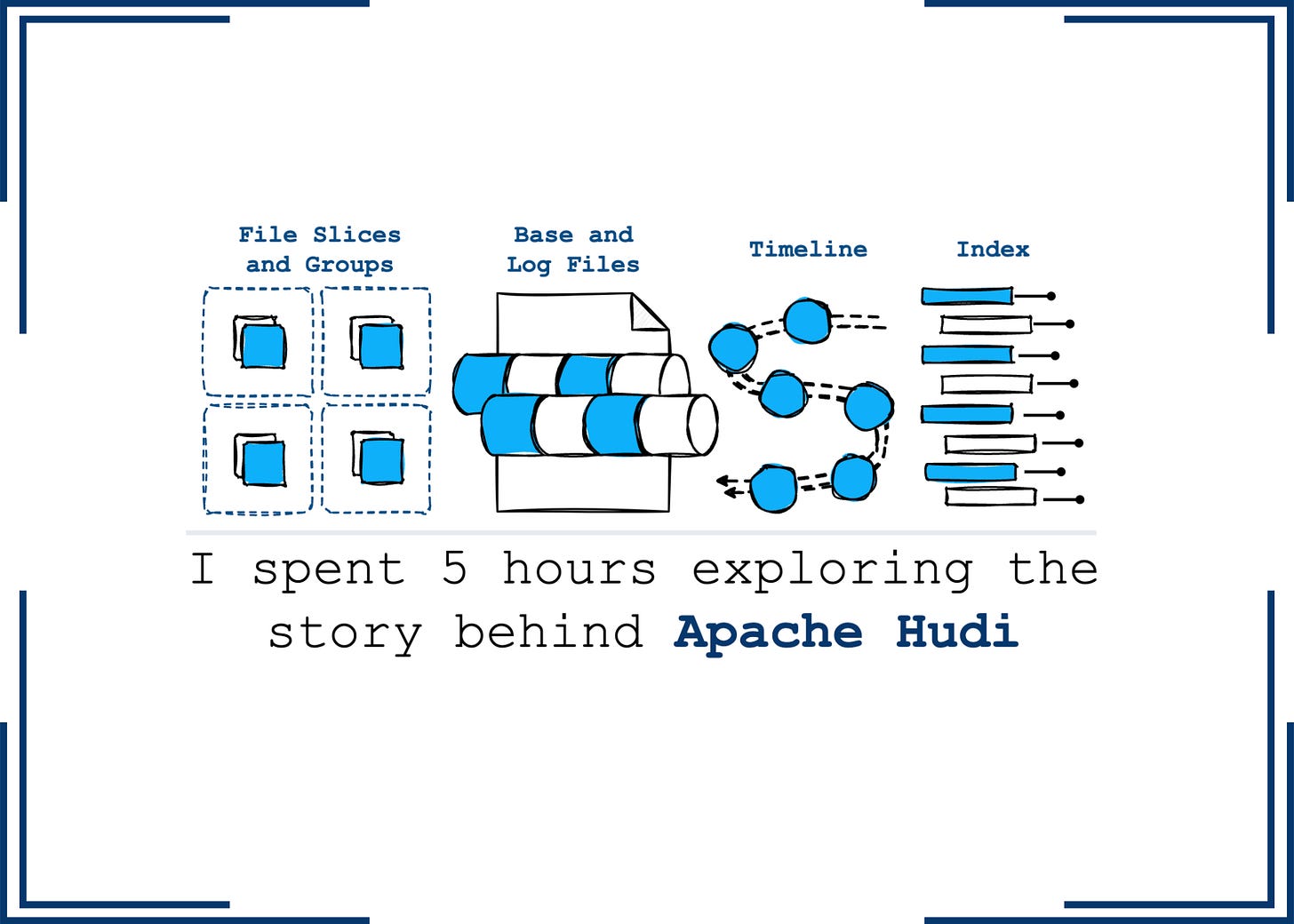

I spent 5 hours exploring the story behind Apache Hudi.

Why did Uber create it back then? What makes Hudi different from Iceberg or Delta Lake?
